Big Industries Academy

Geospatial Big Data at Big Industries PART II: How?

Recap: why GeoMesa?

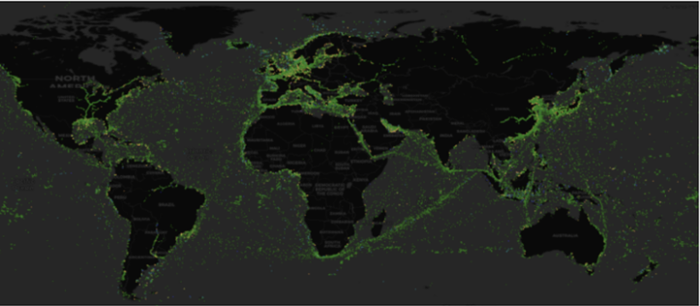

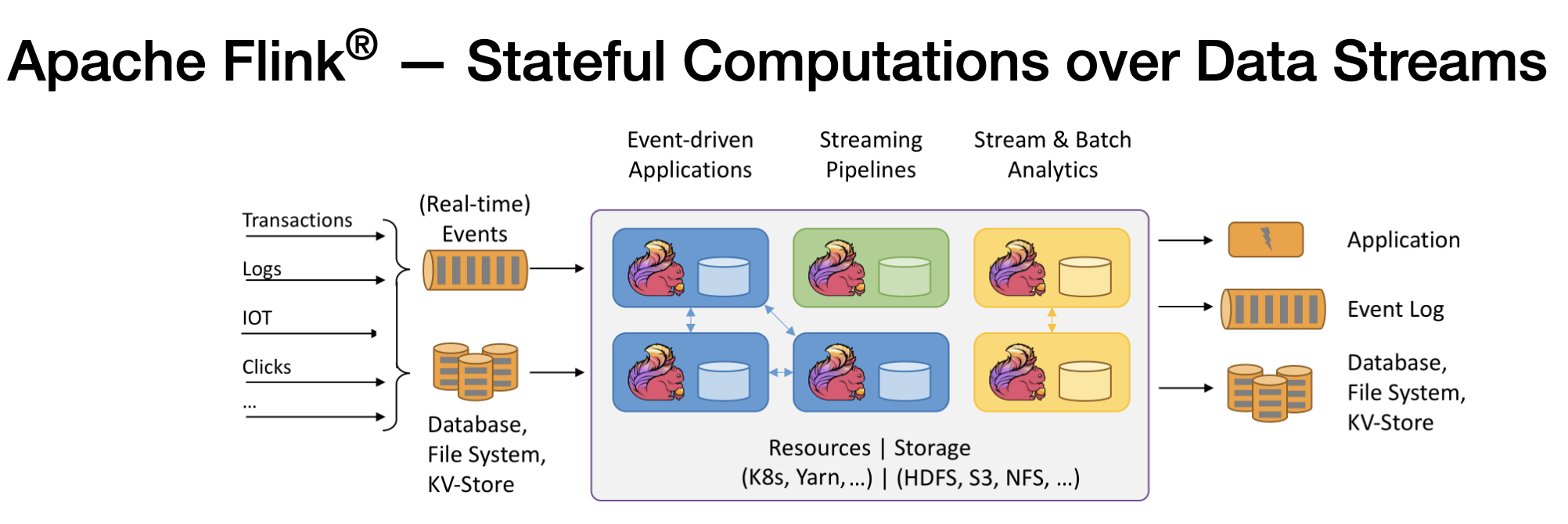

As described in an earlier post, at Big Industries we make use of GeoServer/GeoMesa to visualize/persist large volumes of geospatial data. Rather than developing their own data processing tools from scratch and selling this as a licensed black box, GeoMesa provides a set of libraries that allows users to leverage some of the most reliable tools on the market for big data processing, including Hbase, Spark, Cassandra, Redis, and Kafka. As these tools continuously evolve, GeoMesa’s releases keep up with the latest developments. As it is an open-source library, it offers full transparancy of what happens beneath the surface.

For data persistence and fast retrieval, GeoMesa relies on a smart choice of its key. It uses a published spatio-temporal indexing structure built on top of a column-family oriented distributed data store that enables efficient storage, querying, and transformation capabilities for large spatio-temporal datasets. Making use of geohashes guarantees that when querying the data, only relevant ranges will be scanned and the requested data is returned swiftly.

Correct construction of line geometries in GeoMesa-SparkSQL

Consider a problem statement where we have recordings of the coordinates (latitude/longitude) along a route.

We have the individual coordinates along the path and want to create the full route as one geometric object (a linestring). The GeoMesa documentation describes how to do this:

An example might be useful. One might expect the line geometry could be constructed as something where we first order all points chronologically:

Note that for creating the point geometry, the longitude has to be provided first (this is different than, for instance, the method used by Google Maps), followed by the latitude, and coordinates are expected to be in "decimal degrees."

Beware of order in distributed computing

At first glance, this might seem to work. After all, we have an ORDER BY statement and when looking at the results most of the constructed lines appear logical. However, in distributing computing (e.g. Spark, Hive, etc.) the order cannot be guaranteed when using a GROUP BY. When calling the GROUP BY, the data can require a shuffle and remix the order . What makes it tricky is that for much of the dataset, there is no reshuffle and the majority of the results appear to be correct. When rerunning the job for the sama dataset, the shuffling will not happen for the same records. In our case, less than 5% of the records were incorrect. An example of an erroneous path between Luxemburg and the USA where the straight line at the bottom directly connecting the two locations should not have been there:

The correct method is to replace the GROUP BY, by a windowing function. This might be common knowledge to the seasoned data engineer, but an example makes it even clearer:

When the code above is used, this leads to correct results where the order is maintained and anomalies like in the figure above will disappear.

Joris Billen

Joris comes from a computational/engineering background and is approaching 10 years of consultancy experience. His focus is on data engineering and he enjoys working at the intersection of the functional aspects of use cases and their implementation on innovative data platforms. He was part of the Big Industries team that laid the foundation for a data analytics platform on Azure for a major client in the auto industry. More recently he has been using Cloudera Hadoop in aviation and anti-fraud projects at EU Institutions and has been digging deeper into AWS. Outside of work he can be found biking, playing soccer and getting fresh air with his (expanding) family.