Big Industries Academy

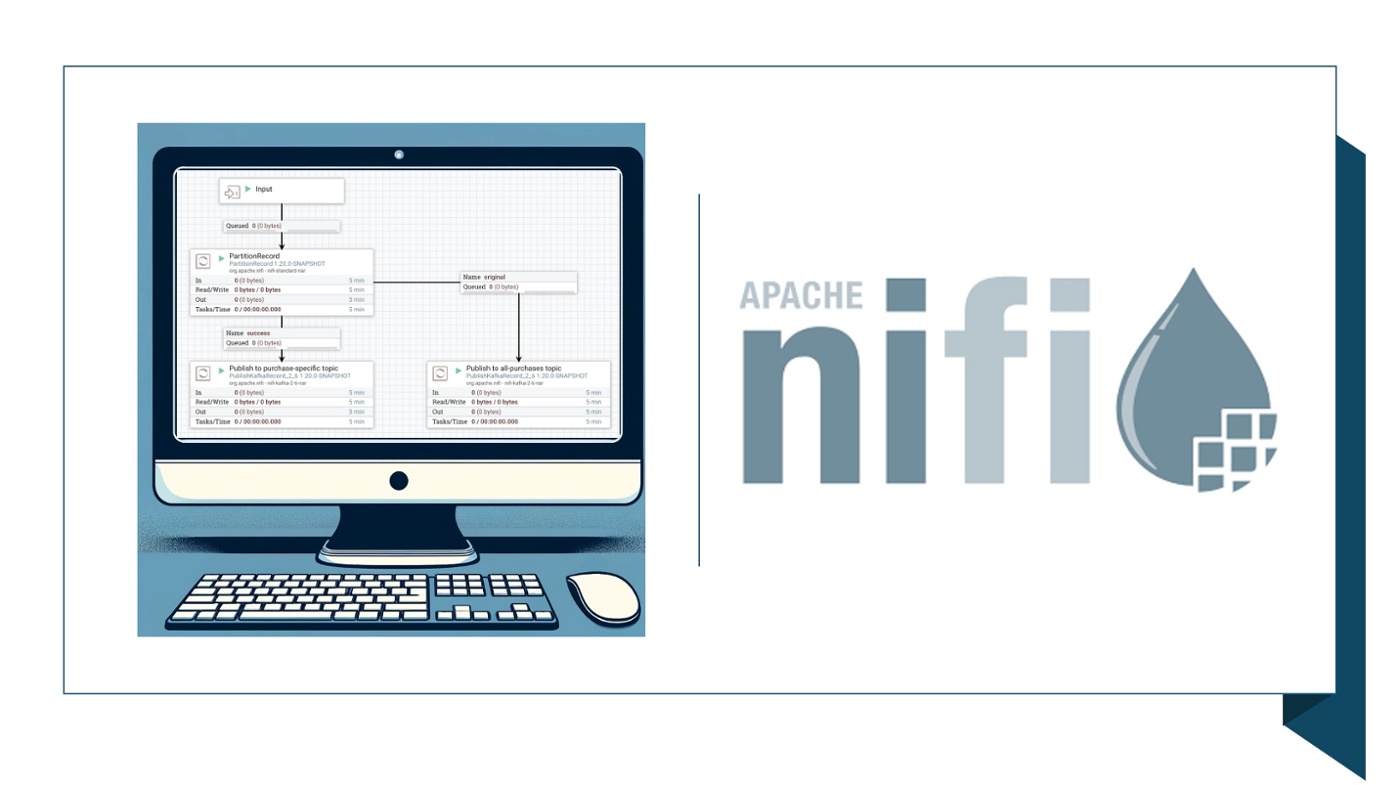

Apache NiFi Explained

Apache NiFi, a robust, scalable, and configurable data processing and distribution system, has...

Big Industries Academy

Kudos to Muthiah

If you grow as an employee, the company grows as well. That’s why we decided to set up the Big...

General

Life at Big Industries: Interview with Morad

Interview with Morad on what it is like to work for Big Industries. How important are the values Share...

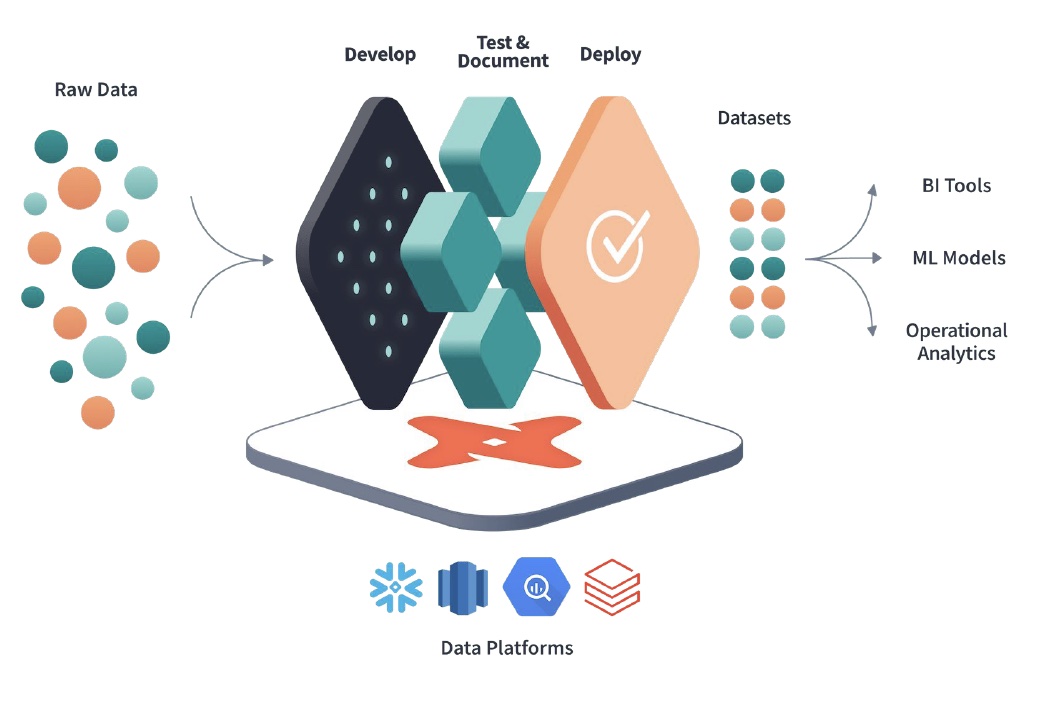

DBT

Big Industries partners with DBT Labs to empower Data Teams

A new collaboration that will enable Big Industries clients to leverage the power of DBT, the...

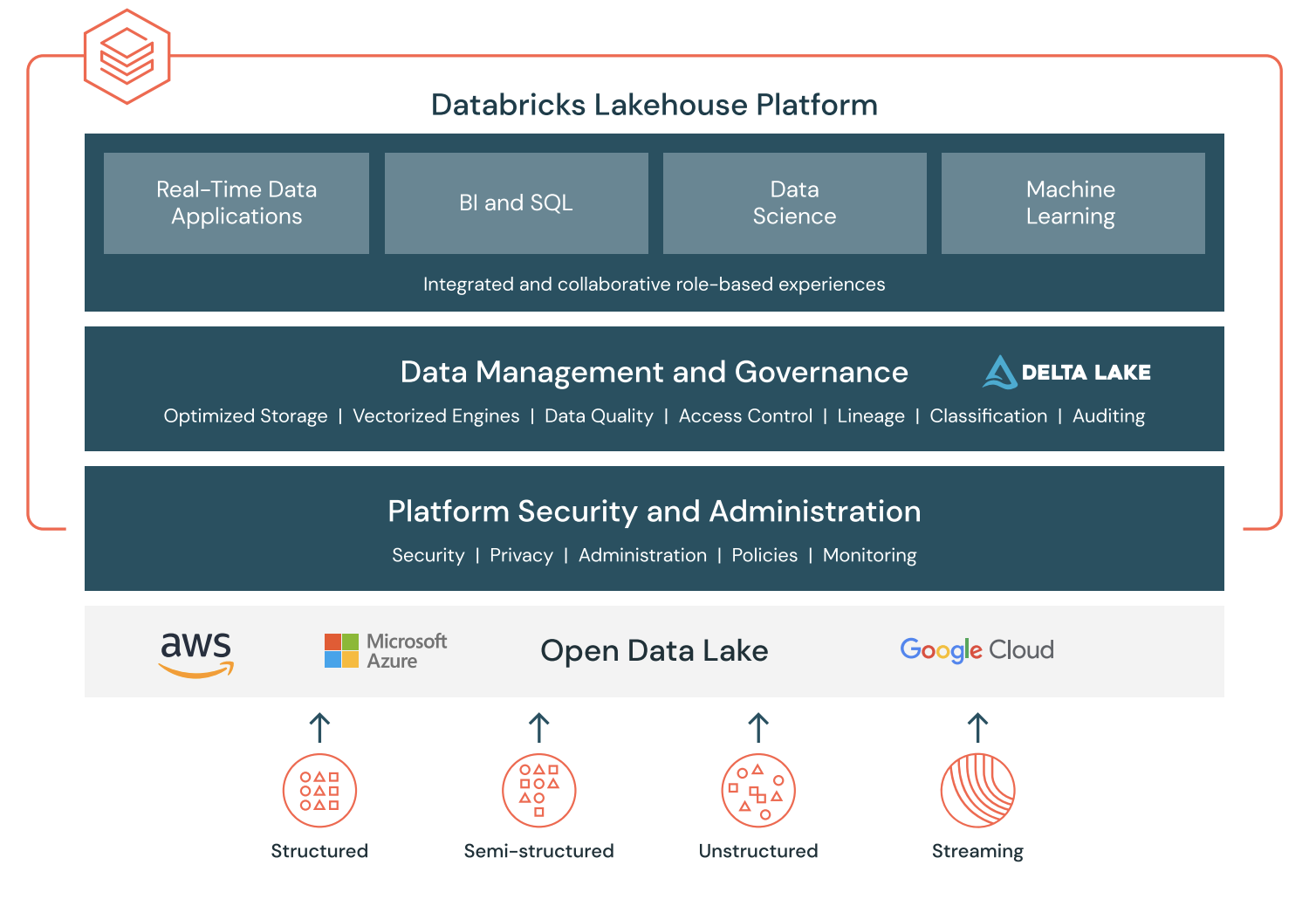

Databricks

Databricks: The Power of Delta Lake and Lakehouse Architecture

Big Industries recently entered into a partnership with Databricks, the leading cloud-based Data...

General

A Warm Welcome to Jutter Peeters and Ali El Khattabi

Congratulations on being part of Big Industries! The whole team welcomes you and we look forward...

General

A Warm Welcome to Andreia Negreira

Congratulations on being part of Big Industries! The whole team welcomes you and we look forward...

General

Life at Big Industries: Interview with Ruben

Interview with Ruben on what it is like to work for Big Industries. How important are the values Share...

General

Happy New Year from Big Industries

Dear Employees and Customers, As the clock strikes midnight, we say goodbye to an incredible year...

Big Industries Academy

Workshop Operationalizing the Machine Learning Pipeline

Data Scientists and ML Practitioners need more than a Jupyter notebook to build, test, and deploy...