AWS Sample Data Platform Architectures

Many Amazon Web Services (AWS) customers require a data storage and analytics solution that offers more agility and flexibility than traditional data management systems. A data lake is a new and increasingly popular way to store and analyze data because it allows companies to manage multiple data types from a wide variety of sources, and store this data, structured and unstructured, in a centralized repository.

The AWS Cloud provides many of the building blocks required to help customers implement a secure, flexible, and cost-effective data lake. These include AWS managed services that help ingest, store, find, process, and analyze both structured and unstructured data. To support our customers as they build data lakes, AWS offers the data lake solution, which is an automated reference implementation that deploys a highly available, cost-effective data lake architecture on the AWS Cloud along with a user-friendly console for searching and requesting datasets.

The following figures illustrate simplified sample data lake architectures on AWS.

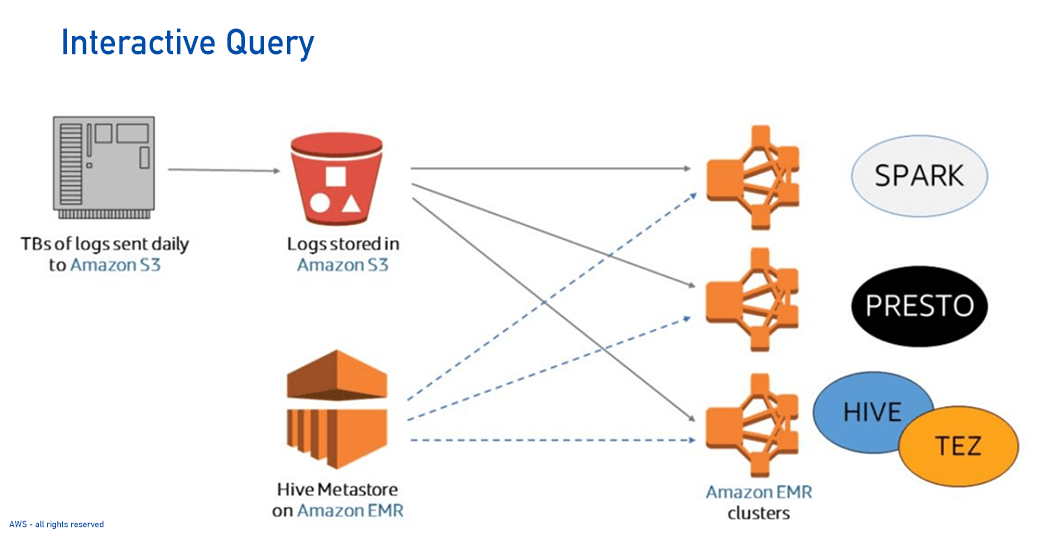

This example ingests terabytes of logs into Amazon S3 once a day. The logs are loaded into Amazon EMR clusters for processing with Hadoop/YARN, Spark, Presto, Hive, Tez, or a similar query-oriented engine. Where a metastore is used, as with Hive, its metadata is loaded onto the clusters to determine how the data is defined and mapped to tables. End users can then run queries against these tables.
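To make the mapping from daily S3 logs to Hive tables concrete, here is a minimal Python sketch. It assumes a Hive-style partition layout with one prefix per day. The bucket name, the `logs/` prefix, the table name, and the `dt` partition column are all hypothetical; the source does not specify a layout.

```python
from datetime import date


def daily_log_prefix(bucket: str, day: date) -> str:
    """Return the S3 prefix holding one day's logs (assumed dt=YYYY-MM-DD layout)."""
    return f"s3://{bucket}/logs/dt={day.isoformat()}/"


def add_partition_statement(table: str, bucket: str, day: date) -> str:
    """Build a HiveQL statement that registers one day's prefix as a table partition."""
    return (
        f"ALTER TABLE {table} ADD IF NOT EXISTS "
        f"PARTITION (dt='{day.isoformat()}') "
        f"LOCATION '{daily_log_prefix(bucket, day)}'"
    )


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    print(add_partition_statement("access_logs", "example-log-bucket", date(2020, 1, 2)))
```

Running a statement like this after each daily load is one way to keep the metastore in step with the data in S3, so that queries only scan the partitions they need.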

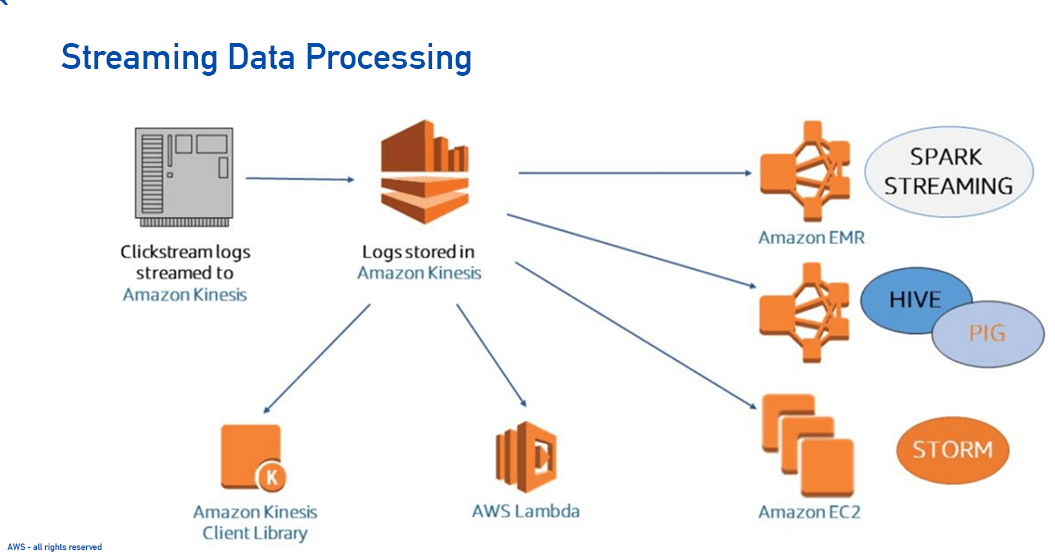

In this example, clickstream logs are processed in real time using Amazon Kinesis. First, they are written to Amazon Kinesis using the Amazon Kinesis Producer Library. After this data has been adequately prepared, it can be passed to a variety of processing options, such as applications built on the Amazon Kinesis Client Library, AWS Lambda functions, and Amazon EMR or EC2 environments running Hadoop or other third-party solutions.
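As an illustration of the AWS Lambda option, the sketch below shows a handler that counts page views in one batch of Kinesis records. Lambda delivers each Kinesis payload base64-encoded under `Records[].kinesis.data`. The JSON payload format and the `page` field are assumptions made for this example, not part of the architecture described above.

```python
import base64
import json
from collections import Counter


def handler(event, context=None):
    """Count page views in one batch of Kinesis records delivered to Lambda."""
    views = Counter()
    for record in event.get("Records", []):
        # Each Kinesis payload arrives base64-encoded.
        payload = base64.b64decode(record["kinesis"]["data"])
        click = json.loads(payload)  # assumes producers send JSON
        views[click["page"]] += 1    # "page" is a hypothetical field name
    return dict(views)
```

A real consumer would typically write the aggregated counts to a store or a downstream stream rather than return them; returning them here keeps the sketch easy to test locally.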

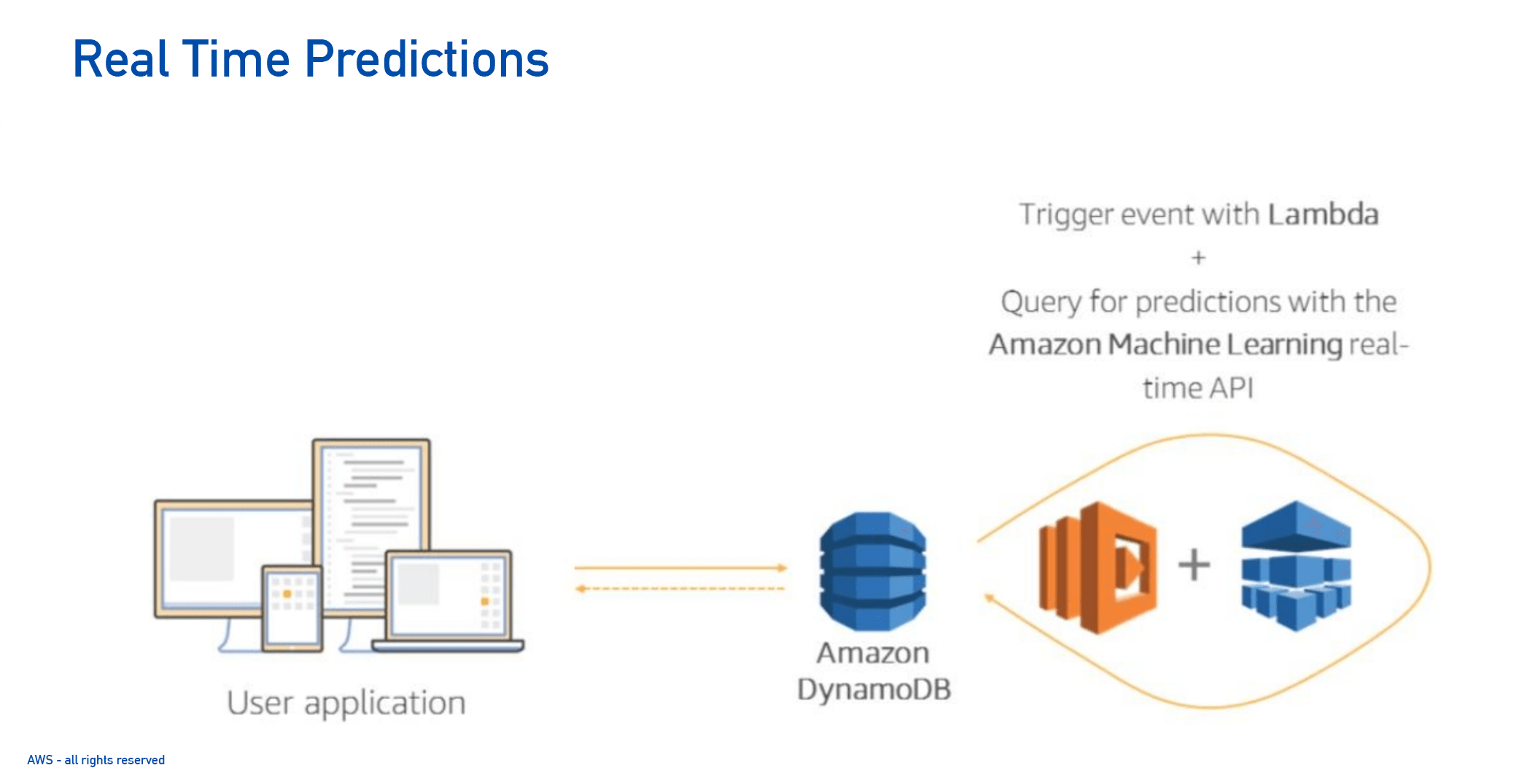

This example focuses on real-time predictive analytics with AWS. The app writes to a DynamoDB table, which generates an event. The event triggers a Lambda function that initiates an analytic query using the Amazon Machine Learning real-time API. The resulting prediction is stored back in DynamoDB and is available for the application to read. A useful example of this is a factory or a warehouse where sensor data tracked in DynamoDB is used to anticipate potential safety or productivity issues. Predicting these issues might require more than simply monitoring for a specific condition or set of conditions; if so, Amazon Machine Learning could be used to find patterns in the data and predict catastrophes before they occur.
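The Lambda step in this flow can be sketched as follows. DynamoDB stream records carry attribute values in a typed form such as `{"temp": {"N": "71.5"}}`, so the sketch flattens them into a plain string map before requesting a prediction. The `predict` callable is a hypothetical stand-in: in a deployment it might wrap the Amazon Machine Learning real-time `Predict` call, but it is injected here so the logic runs without AWS access. The sketch also assumes the stream is configured to include new item images, and it leaves out writing the prediction back to DynamoDB.

```python
from typing import Callable, Dict, List


def flatten_image(image: Dict[str, dict]) -> Dict[str, str]:
    """Convert a DynamoDB stream image into a flat name-to-string map."""
    flat = {}
    for name, typed_value in image.items():
        # Keep only scalar string (S) and number (N) attributes.
        for type_tag in ("S", "N"):
            if type_tag in typed_value:
                flat[name] = typed_value[type_tag]
    return flat


def handle_stream_event(event: dict, predict: Callable[[Dict[str, str]], dict]) -> List[dict]:
    """Request one prediction per inserted or modified item in the batch."""
    results = []
    for record in event.get("Records", []):
        if record.get("eventName") not in ("INSERT", "MODIFY"):
            continue  # REMOVE events carry no new image
        features = flatten_image(record["dynamodb"]["NewImage"])
        results.append(predict(features))
    return results
```

Passing the predictor in as an argument is a design choice for testability; a handler that stores results in the same table would also need to avoid reacting to its own writes.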

Need help with your AWS data platform project?

We are here to help, and we look forward to hearing from you.